In today’s rapidly changing world, monitoring the health of our planet’s vegetation is more critical than ever. Vegetation plays a crucial role in maintaining ecological balance, providing sustenance, and acting as a carbon sink. Traditionally, monitoring vegetation health has been a daunting task. Methods such as field surveys and manual satellite data analysis are time-consuming and require significant resources and domain expertise. The result is often delays in data collection and analysis, which make it difficult to track and respond swiftly to environmental changes. Furthermore, the high costs of these methods limit their accessibility and frequency, hindering comprehensive, ongoing vegetation monitoring at a planetary scale. In light of these challenges, we have developed a solution that streamlines vegetation monitoring on a global scale.

As an alternative to traditional, labor-intensive methods of monitoring vegetation health, Amazon SageMaker geospatial capabilities offer a streamlined, cost-effective solution. Amazon SageMaker supports geospatial machine learning (ML) capabilities, allowing data scientists and ML engineers to build, train, and deploy ML models using geospatial data. These geospatial capabilities open up new possibilities for environmental monitoring. With SageMaker, users can access a wide array of geospatial datasets, efficiently process and enrich this data, and accelerate their development timelines. Tasks that previously took days or even weeks can now be done in a fraction of the time.

In this post, we demonstrate the power of SageMaker geospatial capabilities by mapping the world’s vegetation in under 20 minutes. This example highlights not only the efficiency of SageMaker, but also how geospatial ML can be used to monitor the environment for sustainability and conservation purposes.

Identify areas of interest

We begin by illustrating how SageMaker can be applied to analyze geospatial data at a global scale. To get started, we follow the steps outlined in Getting Started with Amazon SageMaker geospatial capabilities. We start with the specification of the geographical coordinates that define a bounding box covering the areas of interest. This bounding box acts as a filter to select only the relevant satellite images that cover the Earth’s land masses.
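As a minimal sketch of this step, the bounding box can be expressed as a closed polygon ring of longitude and latitude pairs. The helper name `bounding_box_polygon` and the extent used below are hypothetical illustrations, not the coordinates used for the results in this post.

```python
from typing import List


def bounding_box_polygon(
    min_lon: float, min_lat: float, max_lon: float, max_lat: float
) -> List[List[List[float]]]:
    """Return a closed polygon ring of [lon, lat] pairs for a bounding box."""
    if not (-180.0 <= min_lon < max_lon <= 180.0):
        raise ValueError("longitudes must satisfy -180 <= min_lon < max_lon <= 180")
    if not (-90.0 <= min_lat < max_lat <= 90.0):
        raise ValueError("latitudes must satisfy -90 <= min_lat < max_lat <= 90")
    ring = [
        [min_lon, min_lat],
        [max_lon, min_lat],
        [max_lon, max_lat],
        [min_lon, max_lat],
        [min_lon, min_lat],  # repeat the first vertex to close the ring
    ]
    return [ring]


# Hypothetical extent chosen for illustration only.
area_of_interest = bounding_box_polygon(-180.0, -60.0, 180.0, 80.0)
```

Returning the ring wrapped in an outer list mirrors the nested coordinate layout that polygon geometries commonly use, so the result can be passed to a search query without reshaping.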

Data acquisition

SageMaker geospatial capabilities provide access to a wide range of public geospatial datasets, including Sentinel-2, Landsat 8, Copernicus DEM, and NAIP. For our vegetation mapping project, we’ve selected Sentinel-2 for its global coverage and update frequency. The Sentinel-2 satellite captures images of Earth’s land surface at a resolution of 10 meters every 5 days. We pick the first week of December 2023 in this example. To make sure we cover most of the visible earth surface, we filter for images with less than 10% cloud coverage. This way, our analysis is based on clear and reliable imagery.

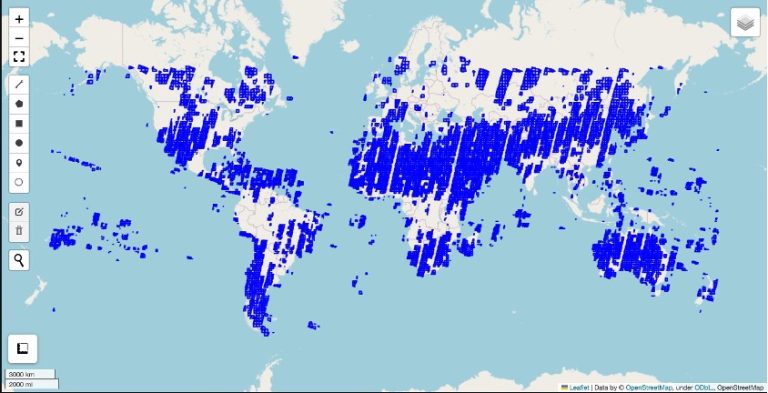

Using the search_raster_data_collection function from SageMaker geospatial, we identified 8,581 unique Sentinel-2 images taken in the first week of December 2023. To validate the accuracy of our selection, we plotted the footprints of these images on a map, confirming that we had the correct images for our analysis.
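The search described above might look like the following sketch, which uses the boto3 `sagemaker-geospatial` client. The helper names, the exact start and end dates, the example polygon, and the default Region are our assumptions for illustration. The collection ARN is deliberately left as a parameter; you can look it up with `list_raster_data_collections`.

```python
from datetime import datetime, timezone
from typing import Any, Dict, Iterator, List


def build_search_query(
    polygon: List[List[List[float]]],
    start: datetime,
    end: datetime,
    max_cloud_cover: float = 10.0,
) -> Dict[str, Any]:
    """Build the RasterDataCollectionQuery for an area, time range, and cloud limit."""
    return {
        "AreaOfInterest": {
            "AreaOfInterestGeometry": {"PolygonGeometry": {"Coordinates": polygon}}
        },
        "TimeRangeFilter": {"StartTime": start, "EndTime": end},
        "PropertyFilters": {
            "Properties": [
                {
                    "Property": {
                        "EoCloudCover": {
                            "LowerBound": 0.0,
                            "UpperBound": max_cloud_cover,
                        }
                    }
                }
            ],
            "LogicalOperator": "AND",
        },
    }


def search_all_items(
    collection_arn: str, query: Dict[str, Any], region: str = "us-west-2"
) -> Iterator[Dict[str, Any]]:
    """Yield every matching item, following NextToken pagination."""
    import boto3  # imported lazily so that building a query needs no AWS setup

    client = boto3.client("sagemaker-geospatial", region_name=region)
    kwargs: Dict[str, Any] = {"Arn": collection_arn, "RasterDataCollectionQuery": query}
    while True:
        response = client.search_raster_data_collection(**kwargs)
        yield from response.get("Items", [])
        token = response.get("NextToken")
        if not token:
            break
        kwargs["NextToken"] = token


# Hypothetical polygon and dates (our reading of "first week of December 2023").
example_polygon = [[[-10.0, 35.0], [5.0, 35.0], [5.0, 45.0], [-10.0, 45.0], [-10.0, 35.0]]]
query = build_search_query(
    example_polygon,
    start=datetime(2023, 12, 1, tzinfo=timezone.utc),
    end=datetime(2023, 12, 7, 23, 59, 59, tzinfo=timezone.utc),
)
```

Calling `list(search_all_items(collection_arn, query))` with valid AWS credentials and the Sentinel-2 collection ARN would then return the matching items, each carrying the footprint and asset links discussed in the next section.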

SageMaker geospatial processing jobs

When querying data with SageMaker geospatial capabilities, we received comprehensive details about our target images, including the data footprint, properties of the spectral bands, and hyperlinks for direct access. With these hyperlinks, we can bypass the traditional memory- and storage-intensive approach of first downloading images and then processing them locally, a task made even more daunting by the scale of our dataset, which spans over 4 TB. Each of the 8,581 images has multiple channels and is approximately 500 MB in size. Processing multiple terabytes of data on a single machine would be time-prohibitive. Although setting up a processing cluster is an alternative, it introduces its own set of complexities, from data distribution to infrastructure management.

SageMaker geospatial streamlines this with Amazon SageMaker Processing. We use the purpose-built geospatial container with SageMaker Processing jobs for a simplified, managed experience to create and run a cluster. With just a few lines of code, you can scale out your geospatial workloads with SageMaker Processing jobs. You specify a script that defines your workload, the location of your geospatial data on Amazon Simple Storage Service (Amazon S3), and the geospatial container. SageMaker Processing then provisions cluster resources for you to run city-, country-, or continent-scale geospatial ML workloads.

For our project, we use 25 clusters of 20 instances each (500 instances in total) to scale out our geospatial workload. We divide the 8,581 images into 25 batches, one per cluster, so each batch contains approximately 340 images. Each batch is then evenly distributed across the machines in its cluster. All batch manifests are uploaded to Amazon S3, ready for the processing jobs, so each segment is processed swiftly and efficiently.
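The batching arithmetic can be sketched as follows. The helper `split_into_batches` is a hypothetical name, and the placeholder image identifiers stand in for the hyperlinks returned by the search. Serializing each batch as a manifest and uploading it to Amazon S3 is not shown.

```python
from typing import List, TypeVar

T = TypeVar("T")


def split_into_batches(items: List[T], num_batches: int) -> List[List[T]]:
    """Split items into num_batches contiguous batches whose sizes differ by at most one."""
    if num_batches <= 0:
        raise ValueError("num_batches must be positive")
    base, extra = divmod(len(items), num_batches)
    batches: List[List[T]] = []
    start = 0
    for index in range(num_batches):
        # The first `extra` batches take one additional item each.
        size = base + (1 if index < extra else 0)
        batches.append(items[start:start + size])
        start += size
    return batches


# Placeholder identifiers standing in for the 8,581 image links.
image_ids = [f"image-{i:04d}" for i in range(8581)]
batches = split_into_batches(image_ids, 25)
batch_sizes = [len(batch) for batch in batches]
```

With 8,581 images and 25 batches, six batches hold 344 images and the remaining nineteen hold 343, which matches the figure of approximately 340 images per batch.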

With our input data ready, we now turn to the core analysis that will reveal insights into vegetation health through the Normalized Difference Vegetation Index (NDVI). NDVI is calculated from the difference between Near-infrared (NIR) and Red reflectances, normalized by their sum, yielding values that range from -1 to 1. Higher NDVI values signal dense, healthy vegetation, a value of zero indicates no vegetation, and negative values usually point to water bodies. This index serves as a critical tool for assessing vegetation health and distribution. The following is an example of what NDVI looks like.
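As a minimal sketch of the NDVI definition above, NDVI = (NIR - Red) / (NIR + Red), the computation can be written with NumPy. The function name `compute_ndvi` and the choice to return NaN where both reflectances are zero are our assumptions. For Sentinel-2, band B08 is commonly used for NIR and band B04 for red, although the band selection is not stated in this post.

```python
import numpy as np


def compute_ndvi(nir, red) -> np.ndarray:
    """Compute NDVI = (NIR - Red) / (NIR + Red) element-wise.

    Pixels where NIR + Red equals zero are returned as NaN instead of
    triggering a division-by-zero warning.
    """
    nir = np.asarray(nir, dtype=np.float32)
    red = np.asarray(red, dtype=np.float32)
    denominator = nir + red
    ndvi = np.full(denominator.shape, np.nan, dtype=np.float32)
    # Divide only where the denominator is nonzero; other pixels stay NaN.
    np.divide(nir - red, denominator, out=ndvi, where=denominator != 0)
    return ndvi


# Synthetic reflectance values for illustration only.
nir_band = np.array([[0.50, 0.30], [0.05, 0.00]])
red_band = np.array([[0.10, 0.30], [0.10, 0.00]])
ndvi = compute_ndvi(nir_band, red_band)
```

In this synthetic example, the first pixel has a high NDVI (dense vegetation), the second is zero, the third is negative, and the last is NaN because both reflectances are zero.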

Now that we have the compute logic defined, we’re ready to start the geospatial SageMaker Processing job. This involves a straightforward three-step process: setting up the compute cluster, defining the computation specifics, and organizing the input and output details.

First, to set up the cluster, we decide on the number and type of instances required for the job, making sure they’re well-suited for geospatial data processing. The compute environment itself is prepared by selecting a geospatial image that comes with all commonly used packages for processing geospatial data.

Next, for the input, we use the previously created manifest that lists all image hyperlinks. We also designate an S3 location to save our results.

With these elements configured, we’re able to initiate multiple processing jobs at once, allowing them to operate concurrently for efficiency.
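The three steps above might be sketched with the SageMaker Python SDK as follows. This is an illustration under stated assumptions, not the exact code behind the results in this post: the instance type, job names, container paths, and the `ShardedByS3Key` distribution setting are our choices, and the image URI, IAM role, and script path are placeholders you must supply.

```python
from typing import Any, Dict, List


def build_job_specs(
    manifest_uris: List[str],
    output_prefix: str,
    instance_count: int = 20,
    instance_type: str = "ml.m5.xlarge",  # assumed instance type
) -> List[Dict[str, Any]]:
    """Create one processing job specification per batch manifest."""
    return [
        {
            "base_job_name": f"ndvi-batch-{index:02d}",
            "manifest_uri": uri,
            "output_uri": f"{output_prefix.rstrip('/')}/batch-{index:02d}/",
            "instance_count": instance_count,
            "instance_type": instance_type,
        }
        for index, uri in enumerate(manifest_uris)
    ]


def launch_jobs(
    specs: List[Dict[str, Any]], image_uri: str, role_arn: str, script_path: str
) -> None:
    """Start one SageMaker Processing job per specification without blocking."""
    # Imported lazily so that specifications can be built without the SDK installed.
    from sagemaker.processing import ProcessingInput, ProcessingOutput, ScriptProcessor

    for spec in specs:
        processor = ScriptProcessor(
            command=["python3"],
            image_uri=image_uri,  # placeholder: the geospatial container image
            role=role_arn,
            instance_count=spec["instance_count"],
            instance_type=spec["instance_type"],
            base_job_name=spec["base_job_name"],
        )
        processor.run(
            code=script_path,
            inputs=[
                ProcessingInput(
                    source=spec["manifest_uri"],
                    destination="/opt/ml/processing/input_data/",
                    s3_data_type="ManifestFile",
                    # Assumed: shard the listed objects across the instances.
                    s3_data_distribution_type="ShardedByS3Key",
                )
            ],
            outputs=[
                ProcessingOutput(
                    source="/opt/ml/processing/output_data/",
                    destination=spec["output_uri"],
                )
            ],
            wait=False,  # return immediately so all jobs run concurrently
            logs=False,
        )


# Hypothetical bucket and manifest names for illustration.
specs = build_job_specs(
    [f"s3://example-bucket/manifests/batch-{i:02d}.manifest" for i in range(25)],
    "s3://example-bucket/ndvi-output",
)
```

Passing `wait=False` is what lets the loop submit every job without waiting for the previous one to finish.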

After you launch the job, SageMaker automatically spins up the required instances and configures the cluster to process the images listed in your input manifest. This entire setup operates seamlessly, without needing your hands-on management. To monitor and manage the processing jobs, you can use the SageMaker console. It offers real-time updates on the status and completion of your processing tasks. In our example, it took under 20 minutes to process all 8,581 images with 500 instances. The scalability of SageMaker allows for faster processing times if needed, simply by increasing the number of instances.
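Besides the console, job status can also be checked programmatically. The following sketch tallies the status of a set of processing jobs using the `describe_processing_job` call of the boto3 `sagemaker` client. The helper names and the job names in the example are hypothetical, and the stand-in `fake_describe` function only simulates responses so the example runs without AWS access.

```python
from collections import Counter
from typing import Any, Callable, Dict, Iterable


def summarize_job_status(
    job_names: Iterable[str], describe: Callable[[str], Dict[str, Any]]
) -> Dict[str, int]:
    """Count processing jobs by their ProcessingJobStatus value."""
    counts: Counter = Counter()
    for name in job_names:
        counts[describe(name)["ProcessingJobStatus"]] += 1
    return dict(counts)


def make_boto3_describe(region: str) -> Callable[[str], Dict[str, Any]]:
    """Return a describe function backed by the boto3 SageMaker client."""
    import boto3  # imported lazily; requires AWS credentials when called

    client = boto3.client("sagemaker", region_name=region)
    return lambda name: client.describe_processing_job(ProcessingJobName=name)


# Simulated responses standing in for real API calls.
def fake_describe(name: str) -> Dict[str, Any]:
    status = "Completed" if name.endswith(("0", "1")) else "InProgress"
    return {"ProcessingJobName": name, "ProcessingJobStatus": status}


summary = summarize_job_status(
    ["ndvi-batch-00", "ndvi-batch-01", "ndvi-batch-02"], fake_describe
)
```

Against real jobs, you would pass `make_boto3_describe("us-west-2")` (or your own Region) in place of `fake_describe`.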

Conclusion

The power and efficiency of SageMaker geospatial capabilities have opened new doors for environmental monitoring, particularly in the realm of vegetation mapping. Through this example, we showcased how to process over 8,500 satellite images in less than 20 minutes. We demonstrated not only the technical feasibility, but also the efficiency gains from using the cloud for environmental analysis. This approach represents a significant leap from traditional, resource-intensive methods to a more agile, scalable, and cost-effective one. The flexibility to scale processing resources up or down as needed, combined with the ease of accessing and analyzing vast datasets, positions SageMaker as a transformative tool in the field of geospatial analysis. By simplifying the complexities associated with large-scale data processing, SageMaker enables scientists, researchers, and business stakeholders to focus more on deriving insights and less on infrastructure and data management.

As we look to the future, the integration of ML and geospatial analytics promises to further enhance our understanding of the planet’s ecological systems. The potential to monitor changes in real time, predict future trends, and respond with more informed decisions can significantly contribute to global conservation efforts. This example of vegetation mapping is just the beginning for running planetary-scale ML. See Amazon SageMaker geospatial capabilities to learn more.

About the Authors

Xiong Zhou is a Senior Applied Scientist at AWS. He leads the science team for Amazon SageMaker geospatial capabilities. His current area of research includes LLM evaluation and data generation. In his spare time, he enjoys running, playing basketball and spending time with his family.

Anirudh Viswanathan is a Sr Product Manager, Technical – External Services with the SageMaker geospatial ML team. He holds a Masters in Robotics from Carnegie Mellon University, an MBA from the Wharton School of Business, and is named inventor on over 40 patents. He enjoys long-distance running, visiting art galleries and Broadway shows.

Janosch Woschitz is a Senior Solutions Architect at AWS, specializing in AI/ML. With over 15 years of experience, he supports customers globally in leveraging AI and ML for innovative solutions and building ML platforms on AWS. His expertise spans machine learning, data engineering, and scalable distributed systems, augmented by a strong background in software engineering and industry expertise in domains such as autonomous driving.

Li Erran Li is the applied science manager at human-in-the-loop services, AWS AI, Amazon. His research interests are 3D deep learning, and vision and language representation learning. Previously he was a senior scientist at Alexa AI, the head of machine learning at Scale AI and the chief scientist at Pony.ai. Before that, he was with the perception team at Uber ATG and the machine learning platform team at Uber working on machine learning for autonomous driving, machine learning systems and strategic initiatives of AI. He started his career at Bell Labs and was adjunct professor at Columbia University. He co-taught tutorials at ICML’17 and ICCV’19, and co-organized several workshops at NeurIPS, ICML, CVPR, ICCV on machine learning for autonomous driving, 3D vision and robotics, machine learning systems and adversarial machine learning. He has a PhD in computer science from Cornell University. He is an ACM Fellow and IEEE Fellow.

Amit Modi is the product leader for SageMaker MLOps, ML Governance, and Responsible AI at AWS. With over a decade of B2B experience, he builds scalable products and teams that drive innovation and deliver value to customers globally.

Kris Efland is a visionary technology leader with a successful track record in driving product innovation and growth for over 20 years. Kris has helped create new products including consumer electronics and enterprise software across many industries, at both startups and large companies. In his current role at Amazon Web Services (AWS), Kris leads the Geospatial AI/ML category. He works at the forefront of Amazon’s fastest-growing ML service, Amazon SageMaker, which serves over 100,000 customers worldwide. He recently led the launch of Amazon SageMaker’s new geospatial capabilities, a powerful set of tools that allow data scientists and machine learning engineers to build, train, and deploy ML models using satellite imagery, maps, and location data. Before joining AWS, Kris was the Head of Autonomous Vehicle (AV) Tools and AV Maps for Lyft, where he led the company’s autonomous mapping efforts and toolchain used to build and operate Lyft’s fleet of autonomous vehicles. He also served as the Director of Engineering at HERE Technologies and Nokia and has co-founded several startups.
