One of the key use cases for generative AI involves answering questions over private datasets, with retrieval-augmented generation (RAG) as the go-to framework. As new RAG techniques emerge, there’s a growing need to benchmark their performance across diverse datasets and metrics.

To meet this need, we’re introducing BenchmarkQED, a new suite of tools that automates RAG benchmarking at scale, available on GitHub. It includes components for query generation, evaluation, and dataset preparation, each designed to support rigorous, reproducible testing.

BenchmarkQED complements the RAG methods in our open-source GraphRAG library, enabling users to run a GraphRAG-style evaluation across models, metrics, and datasets. GraphRAG uses a large language model (LLM) to generate and summarize entity-based knowledge graphs, producing more comprehensive and diverse answers than standard RAG for large-scale tasks.

In this post, we walk through the core components of BenchmarkQED that contribute to the overall benchmarking process. We also share some of the latest benchmark results comparing our LazyGraphRAG system to competing methods, including a vector-based RAG with a 1M-token context window, where the leading LazyGraphRAG configuration showed significant win rates across all combinations of quality metrics and query classes.

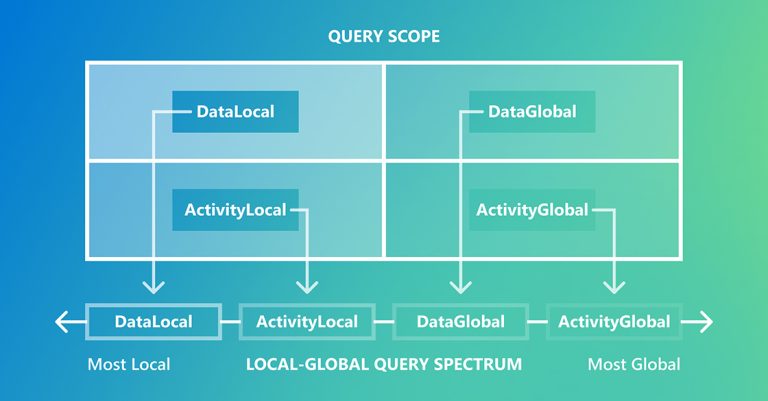

In the GraphRAG paper, we distinguish between local queries, where answers are found in a small number of text regions (sometimes a single region), and global queries, which require reasoning over large portions of the dataset or even all of it.

Conventional vector-based RAG excels at local queries because the regions containing the answer to the query resemble the query itself and can be retrieved as the nearest neighbor in the vector space of text embeddings. However, it struggles with global questions, such as, “What are the main themes of the dataset?” which require understanding dataset qualities not explicitly stated in the text.
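
To make the nearest-neighbor idea concrete, here is a minimal sketch of embedding-based retrieval. It is not the implementation of any system discussed in this post: the function names are hypothetical, the three-dimensional vectors are toy values rather than output from a real embedding model, and cosine similarity is assumed as the distance measure.

```python
import math

def cosine_similarity(a, b):
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def retrieve_top_k(query_vec, chunk_vecs, k=2):
    """Return the indices of the k chunks most similar to the query."""
    scored = [(cosine_similarity(query_vec, v), i) for i, v in enumerate(chunk_vecs)]
    # Highest similarity first; ties are broken by chunk index.
    scored.sort(key=lambda s: (-s[0], s[1]))
    return [i for _, i in scored[:k]]

# Toy "embeddings" (hypothetical values chosen for illustration only).
chunks = [
    [1.0, 0.0, 0.0],
    [0.9, 0.1, 0.0],
    [0.0, 1.0, 0.0],
    [0.0, 0.0, 1.0],
]
query = [1.0, 0.05, 0.0]
top = retrieve_top_k(query, chunks, k=2)
print(top)  # [0, 1]
```

A local query lands close to the few chunks that contain its answer, so a ranking like this works well. A global query such as "What are the main themes of the dataset?" is not close to any particular chunk, which is why this style of retrieval struggles with it.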

AutoQ: Automated query synthesis

This limitation motivated the development of GraphRAG, a system designed to answer global queries. GraphRAG’s evaluation requirements subsequently led to the creation of AutoQ, a method for synthesizing these global queries for any dataset.

AutoQ extends this approach by generating synthetic queries across the full spectrum, from local to global. It defines four distinct classes based on the source and scope of the query (Figure 1, top), forming a logical progression along the spectrum (Figure 1, bottom).

AutoQ can be configured to generate any number and distribution of synthetic queries along these classes, enabling consistent benchmarking across datasets without requiring user customization. Figure 2 shows the synthesis process and sample queries from each class, using an AP News dataset.

AutoE: Automated evaluation framework

Our evaluation of GraphRAG focused on analyzing key qualities of answers to global questions. The following qualities were used for the current evaluation:

- Comprehensiveness: Does the answer address all relevant aspects of the question?

- Diversity: Does it present varied perspectives or insights?

- Empowerment: Does it help the reader understand and make informed judgments?

- Relevance: Does it address what the question is specifically asking?

The AutoE component scales evaluation of these qualities using the LLM-as-a-Judge method. It presents pairs of answers to an LLM, along with the query and target metric, in counterbalanced order. The model determines whether the first answer wins, loses, or ties with the second. Over a set of queries, whether from AutoQ or elsewhere, this produces win rates between competing methods. When ground truth is available, AutoE can also score answers on correctness, completeness, and related metrics.
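
The counterbalancing step can be sketched as follows. This is an illustration under stated assumptions, not AutoE's actual code: `toy_judge` and `first_position_biased_judge` are hypothetical stand-ins for the LLM call, and the even split of trials between the two presentation orders is our assumption.

```python
# Outcomes are always reported from answer A's perspective.
FLIP = {"win": "loss", "loss": "win", "tie": "tie"}

def toy_judge(query, metric, first, second):
    """Hypothetical stand-in for an LLM judge: prefers the longer answer.
    Returns 'win', 'loss', or 'tie' from the perspective of `first`."""
    if len(first) > len(second):
        return "win"
    if len(first) < len(second):
        return "loss"
    return "tie"

def first_position_biased_judge(query, metric, first, second):
    """Hypothetical judge that always prefers whichever answer it sees first."""
    return "win"

def counterbalanced_trials(query, metric, answer_a, answer_b, judge, n_trials=6):
    """Run n_trials judgments, alternating which answer is presented first."""
    outcomes = []
    for t in range(n_trials):
        if t % 2 == 0:
            # Answer A is shown first, so the verdict is already A's outcome.
            outcomes.append(judge(query, metric, answer_a, answer_b))
        else:
            # Answer B is shown first, so flip the verdict back to A's view.
            outcomes.append(FLIP[judge(query, metric, answer_b, answer_a)])
    return outcomes

fair = counterbalanced_trials(
    "q", "comprehensiveness", "a long, detailed answer", "short", toy_judge
)
biased = counterbalanced_trials(
    "q", "comprehensiveness", "answer one", "answer two", first_position_biased_judge
)
print(fair)    # ['win', 'win', 'win', 'win', 'win', 'win']
print(biased)  # ['win', 'loss', 'win', 'loss', 'win', 'loss']
```

The second call shows why the ordering matters: a judge with pure position bias produces equal numbers of wins and losses once the order is alternated, so the bias cancels out instead of favoring one method.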

An illustrative evaluation is shown in Figure 3. Using a dataset of 1,397 AP News articles on health and healthcare, AutoQ generated 50 queries per class (200 total). AutoE then compared LazyGraphRAG to a competing RAG method, running six trials per query across four metrics, using GPT-4.1 as a judge.

These trial-level results were aggregated using metric-based win rates, where each trial is scored 1 for a win, 0.5 for a tie, and 0 for a loss, and then averaged to calculate the overall win rate for each RAG method.
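
As a worked example of this scoring rule, the sketch below averages a small set of trial outcomes. The function name and the sample outcomes are hypothetical; only the 1 / 0.5 / 0 scoring comes from the description above.

```python
# Score assigned to each trial outcome, as described in the text.
SCORES = {"win": 1.0, "tie": 0.5, "loss": 0.0}

def win_rate(outcomes):
    """Average the per-trial scores for one method."""
    if not outcomes:
        raise ValueError("at least one trial outcome is required")
    return sum(SCORES[o] for o in outcomes) / len(outcomes)

# Six hypothetical trials for method A against method B on one metric.
trials_a = ["win", "win", "tie", "loss", "win", "tie"]
rate_a = win_rate(trials_a)  # (1 + 1 + 0.5 + 0 + 1 + 0.5) / 6
rate_b = 1.0 - rate_a        # the opposing method receives the complement

print(round(rate_a, 3))  # 0.667
print(round(rate_b, 3))  # 0.333
```

Because a tie contributes 0.5 to both sides, the two win rates in a pairwise comparison always sum to 1, and a rate of 0.5 means neither method is preferred.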

The four LazyGraphRAG conditions (LGR_b200_c200, LGR_b50_c200, LGR_b50_c600, LGR_b200_c200_mini) differ by query budget (b50, b200) and chunk size (c200, c600). All used GPT-4o mini for relevance tests and GPT-4o for query expansion (to five subqueries) and answer generation, except for LGR_b200_c200_mini, which used GPT-4o mini throughout.
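
The condition names encode their settings directly. The helper below is a hypothetical convenience for reading them and is not part of BenchmarkQED; it assumes only the naming pattern visible in the four names above.

```python
import re

# Pattern inferred from the four condition names listed in the text.
CONDITION_PATTERN = re.compile(r"^LGR_b(\d+)_c(\d+)(_mini)?$")

def parse_condition(name):
    """Split a LazyGraphRAG condition name into its settings."""
    match = CONDITION_PATTERN.match(name)
    if match is None:
        raise ValueError(f"unrecognized condition name: {name!r}")
    return {
        "name": name,
        "query_budget": int(match.group(1)),
        "chunk_size": int(match.group(2)),
        # True only for the condition that used GPT-4o mini throughout.
        "mini": match.group(3) is not None,
    }

conditions = [
    parse_condition(n)
    for n in ("LGR_b200_c200", "LGR_b50_c200", "LGR_b50_c600", "LGR_b200_c200_mini")
]
for c in conditions:
    print(c)
```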

Comparison systems were GraphRAG (Local, Global, and Drift Search), Vector RAG with 8k- and 120k-token windows, and three published methods: LightRAG, RAPTOR, and TREX. All methods were limited to the same 8k tokens for answer generation. GraphRAG Global Search used level 2 of the community hierarchy.

LazyGraphRAG outperformed every comparison condition using the same generative model (GPT-4o), winning all 96 comparisons, with all but one reaching statistical significance. The best overall performance came from the larger budget, smaller chunk size configuration (LGR_b200_c200). For DataLocal queries, the smaller budget (LGR_b50_c200) performed slightly better, likely because fewer chunks were relevant. For ActivityLocal queries, the larger chunk size (LGR_b50_c600) had a slight edge, likely because longer chunks provide a more coherent context.

Competing methods performed relatively better on the query classes for which they were designed: GraphRAG Global on global queries and Vector RAG on local queries. GraphRAG Drift Search, which combines both strategies, posed the strongest challenge overall.

Increasing Vector RAG’s context window from 8k to 120k tokens did not improve its performance compared to LazyGraphRAG. This raised the question of how LazyGraphRAG would perform against Vector RAG with a 1-million-token context window containing most of the dataset.

Figure 4 shows the follow-up experiment, which used GPT-4.1 to enable this comparison between LazyGraphRAG and Vector RAG. Even against the 1M-token window, LazyGraphRAG achieved higher win rates across all comparisons, failing to reach significance only for the relevance of answers to DataLocal queries. These queries tend to benefit most from Vector RAG’s ranking of directly relevant chunks, making it hard for LazyGraphRAG to generate answers that have greater relevance to the query, even though these answers may be dramatically more comprehensive, diverse, and empowering overall.

AutoD: Automated data sampling and summarization

Text datasets have an underlying topical structure, but the depth, breadth, and connectivity of that structure can vary widely. This variability makes it difficult to evaluate RAG systems consistently, as results may reflect the idiosyncrasies of the dataset rather than the system’s general capabilities.

The AutoD component addresses this by sampling datasets to meet a target specification, defined by the number of topic clusters (breadth) and the number of samples per cluster (depth). This creates consistency across datasets, enabling more meaningful comparisons, as structurally aligned datasets lead to comparable AutoQ queries, which in turn support consistent AutoE evaluations.
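
The breadth-and-depth specification can be illustrated with a short sketch. This is not AutoD's implementation: the function name is hypothetical, cluster labels are assumed to have been computed already by some separate step, and uniform random sampling is our simplification.

```python
import random
from collections import defaultdict

def sample_to_spec(docs, breadth, depth, seed=0):
    """Sample `breadth` topic clusters and `depth` documents from each.

    `docs` is a list of (doc_id, cluster_label) pairs; the labels are
    assumed to come from an earlier clustering step not shown here.
    """
    by_cluster = defaultdict(list)
    for doc_id, label in docs:
        by_cluster[label].append(doc_id)

    # Only clusters with at least `depth` documents can satisfy the spec.
    eligible = sorted(label for label, ids in by_cluster.items() if len(ids) >= depth)
    if len(eligible) < breadth:
        raise ValueError("dataset cannot satisfy the requested breadth and depth")

    rng = random.Random(seed)  # fixed seed keeps the sample reproducible
    chosen = rng.sample(eligible, breadth)
    return {label: rng.sample(by_cluster[label], depth) for label in chosen}

# Toy dataset: three clusters of five documents and one cluster of one.
docs = [(f"{label}-{i}", label) for label in ("a", "b", "c") for i in range(5)]
docs.append(("d-0", "d"))

sample = sample_to_spec(docs, breadth=2, depth=3)
print(sample)
```

Applying the same breadth and depth to two different datasets yields samples with the same shape, which is what makes the resulting queries and evaluations comparable.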

AutoD also includes tools for summarizing input or output datasets in a way that reflects their topical coverage. These summaries play an important role in the AutoQ query synthesis process, but they can also be used more broadly, such as in prompts where context space is limited.

Since the release of the GraphRAG paper, we’ve received many requests to share the dataset of the Behind the Tech podcast transcripts we used in our evaluation. An updated version of this dataset is now available in the BenchmarkQED repository, alongside the AP News dataset containing 1,397 health-related articles, licensed for open release.

We hope these datasets, together with the BenchmarkQED tools, help accelerate benchmark-driven development of RAG systems and AI question-answering. We invite the community to try them on GitHub.