Large language models (LLMs) can solve complex puzzles in seconds, yet they sometimes struggle with simple conversations. When these AI tools make assumptions, overlook key details, or neglect to ask clarifying questions, the result can erode trust and derail real-world interactions, where nuance is everything.

A key reason these models behave this way lies in how they’re trained and evaluated. Most benchmarks use isolated, single-turn prompts with clear instructions. Training methods tend to optimize for the model’s next response, not its contribution to a successful, multi-turn exchange. But real-world interaction is dynamic and collaborative. It relies on context, clarification, and shared understanding.

User-centric approach to training

To address this, we’re exploring ways to train LLMs with users in mind. Our approach places models in simulated environments that reflect the back-and-forth nature of real conversations. Through reinforcement learning, these models improve by trial and error, learning, for example, when to ask questions and how to adapt tone and communication style to different situations. This user-centric approach helps bridge the gap between how LLMs are typically trained and how people actually use them.

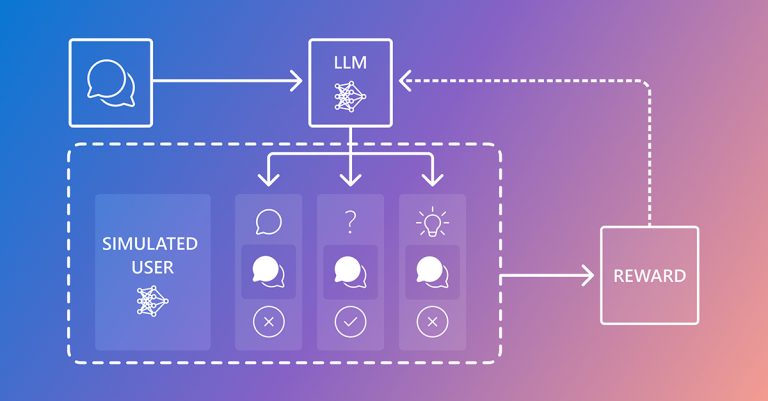

This is the concept behind CollabLLM, recipient of an ICML Outstanding Paper Award. This training framework helps LLMs improve through simulated multi-turn interactions, as illustrated in Figure 1. The core insight behind CollabLLM is simple: in a constructive collaboration, the value of a response isn’t just in its immediate usefulness, but in how it contributes to the overall success of the conversation. A clarifying question might seem like a delay but often leads to better outcomes. A quick answer might appear useful but can create confusion or derail the interaction.

CollabLLM puts this collaborative approach into practice with a simulation-based training loop, illustrated in Figure 2. At any point in a conversation, the model generates multiple possible next turns by engaging in a dialogue with a simulated user.

The system uses a sampling method to extend conversations turn by turn, choosing likely responses for each participant (the AI agent or the simulated user), while adding some randomness to vary the conversational paths. The goal is to expose the model to a wide variety of conversational scenarios, helping it learn more effective collaboration strategies.
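The turn-by-turn sampling described here can be pictured with a small sketch. This is only an illustration under stated assumptions, not CollabLLM's implementation: `toy_candidates` is a hypothetical stand-in for the two language models (the AI agent and the simulated user), and the `temperature` parameter is one common way to add the kind of randomness the text mentions.

```python
import math
import random


def sample_with_temperature(candidates, temperature, rng):
    """Pick one reply from (text, score) pairs using a softmax over score / temperature."""
    texts = [text for text, _ in candidates]
    scaled = [score / temperature for _, score in candidates]
    peak = max(scaled)  # subtract the max for numerical stability
    weights = [math.exp(s - peak) for s in scaled]
    return rng.choices(texts, weights=weights, k=1)[0]


def toy_candidates(speaker, conversation):
    """Hypothetical stand-in for an LLM call: returns scored candidate replies."""
    if speaker == "assistant":
        return [("Who is the intended audience?", 1.0),
                ("Here is a complete draft.", 0.5)]
    return [("It is for new engineers.", 1.0),
            ("Just keep it short.", 0.3)]


def rollout(history, num_turns, temperature=1.0, seed=None):
    """Extend a conversation turn by turn, alternating assistant and simulated user."""
    rng = random.Random(seed)
    conversation = list(history)
    speaker = "assistant"  # assumes the history ends with a user turn
    for _ in range(num_turns):
        reply = sample_with_temperature(
            toy_candidates(speaker, conversation), temperature, rng)
        conversation.append((speaker, reply))
        speaker = "user" if speaker == "assistant" else "assistant"
    return conversation


start = [("user", "Write an onboarding document.")]
paths = [rollout(start, num_turns=4, seed=s) for s in range(3)]
```

Higher temperatures flatten the distribution over candidates, so repeated rollouts from the same starting point are more likely to diverge into different conversational paths.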

To each simulated conversation, we applied multiturn-aware reward (MR) functions, which assess how the model’s response at the given turn influences the entire trajectory of the conversation. We sampled multiple conversational follow-ups from the model, such as statements, suggestions, and questions, and used MR to assign a reward to each based on how well the conversation performed in later turns. We based these scores on automated metrics that reflect key factors like goal completion, conversational efficiency, and user engagement.

To score the sampled conversations, we used task-specific metrics and metrics from an LLM-as-a-judge framework, which supports efficient and scalable evaluation. For metrics like engagement, a judge model rates each sampled conversation on a scale from 0 to 1.
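One way to picture how several metrics might feed a single conversation score is sketched below. The weighted sum, the weights, the token budget, and the function names are all assumptions made for illustration; the post does not specify how the task-specific and judge-based metrics are combined.

```python
def clamp01(value):
    """Restrict a value to the range [0, 1]."""
    return max(0.0, min(1.0, value))


def conversation_score(task_score, judge_engagement, tokens_used,
                       token_budget=2000, weights=(0.6, 0.3, 0.1)):
    """Combine three metrics into one score in [0, 1].

    task_score:       task-specific metric (e.g. goal completion), in [0, 1]
    judge_engagement: rating from an LLM judge, in [0, 1]
    tokens_used:      conversation length, used as an efficiency proxy
    The weights and token_budget are hypothetical values, not from the paper.
    """
    efficiency = 1.0 - clamp01(tokens_used / token_budget)
    w_task, w_engage, w_efficiency = weights
    return (w_task * clamp01(task_score)
            + w_engage * clamp01(judge_engagement)
            + w_efficiency * efficiency)


short_and_good = conversation_score(0.9, 0.8, tokens_used=500)
long_and_poor = conversation_score(0.4, 0.5, tokens_used=1800)
```

Clamping each input keeps an out-of-range judge rating from dominating the total, which matters when ratings come from a model rather than a fixed formula.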

The MR of each model response was computed by averaging the scores of the sampled conversations that originate from that response. Based on this reward, the model updates its parameters using established reinforcement learning algorithms like Proximal Policy Optimization (PPO) or Direct Preference Optimization (DPO).
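The averaging step can be sketched in a few lines. The reward function follows the description above; turning the rewards into a chosen/rejected pair by taking the highest- and lowest-reward candidates is an assumption about how preference data for a method like DPO could be built, and the example scores are made up for illustration.

```python
from statistics import mean


def multiturn_reward(rollout_scores):
    """MR of one candidate response: the mean score of its sampled conversations."""
    return mean(rollout_scores)


def preference_pair(candidate_scores):
    """Rank candidates by MR and return (chosen, rejected, rewards).

    candidate_scores maps each candidate response to the scores of the
    conversations sampled from it. Picking the best and worst candidates
    as a preference pair is an illustrative assumption.
    """
    rewards = {response: multiturn_reward(scores)
               for response, scores in candidate_scores.items()}
    chosen = max(rewards, key=rewards.get)
    rejected = min(rewards, key=rewards.get)
    return chosen, rejected, rewards


# Made-up scores for two candidate next turns, three sampled conversations each.
example = {
    "Who is the intended audience?": [0.8, 0.9, 0.7],
    "Here is a complete draft.": [0.4, 0.6, 0.5],
}
chosen, rejected, rewards = preference_pair(example)
```

In this toy example the clarifying question earns the higher reward because the conversations that follow it score better, even though it delays the first draft.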

We tested CollabLLM through a combination of automated and human evaluations, detailed in the paper. One highlight is a user study involving 201 participants in a document co-creation task, shown in Figure 3. We compared CollabLLM to a baseline trained with single-turn rewards and to a second, more proactive baseline prompted to ask clarifying questions and take other proactive steps. CollabLLM outperformed both, producing higher-quality documents, better interaction ratings, and faster task completion times.

Designing for real-world collaboration

Much of today’s AI research focuses on fully automated tasks, in which models work without input from or interaction with users. But many real-world applications depend on people in the loop: as users, collaborators, or decision-makers. Designing AI systems that treat user input not as a constraint, but as essential, leads to systems that are more accurate, more helpful, and ultimately more trustworthy.

This work is driven by a core belief: the future of AI depends not just on intelligence, but on the ability to collaborate effectively. And that means confronting the communication breakdowns in today’s systems.

We see CollabLLM as a step in that direction, training models to engage in meaningful multi-turn interactions, ask clarifying questions, and adapt to context. In doing so, we can build systems designed to work with people, not around them.